I have been involved in a lot of site redesigns, both as part of the delivery team and as a post-launch fixer. I continue to be surprised at the number of mistakes developers make, many of which have negative SEO impacts. Here is my running list, which helps me when I audit sites or work with developers.

Server Files & Configuration

There are a number of elements of your Web server’s configuration that can be missed when a new server instance is created, and these are not immediately obvious unless you go looking for them.

Robots.txt

This is a simple file, yet it has the power to significantly impact your organic traffic. The most common scenario is that the developer puts a “disallow all bots” record in it while the site is being built. This reduces the chances that search engine crawlers will index a site under development, which is a good thing. But then the dev server becomes the production server and the robots.txt setting is forgotten. Now you have a production site that’s blocking crawlers.
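A quick pre-launch check can catch this. The sketch below uses Python’s standard `urllib.robotparser` to test whether a robots.txt body shuts out a generic crawler. The function name `blocks_all_crawlers` and the example.com URL are my own placeholders, and it works on text you have already downloaded rather than fetching anything itself.

```python
from urllib.robotparser import RobotFileParser


def blocks_all_crawlers(robots_txt: str, probe_url: str = "https://example.com/") -> bool:
    """Return True if robots_txt disallows a generic crawler from probe_url.

    blocks_all_crawlers and probe_url are illustrative names, not part of any tool.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # "*" stands in for any crawler that has no record of its own in the file.
    return not parser.can_fetch("*", probe_url)


# The "disallow all bots" record that tends to get left behind after launch.
dev_robots = "User-agent: *\nDisallow: /\n"
# A more typical production file that only hides one directory.
live_robots = "User-agent: *\nDisallow: /admin/\n"

print(blocks_all_crawlers(dev_robots))   # True
print(blocks_all_crawlers(live_robots))  # False
```

Probing the home page is enough to spot a blanket block; a fuller audit would probe a handful of important interior URLs as well.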

Protocol and Subdomain

A common error is the incomplete handling of the protocol (http vs. https), the subdomain (www vs. non-www), or both. Basically, every combination should redirect to a single configuration. With Google’s emphasis on security, every site should be using https. So you want to make sure that every “http” request 301 redirects to its “https” counterpart. You also don’t want both www and non-www versions of your URLs indexed by search engines. The www requests should redirect to their non-www counterparts, or vice versa. Whichever one you choose, make sure all requests redirect to it (verify that it works for interior pages as well as the home page).

Note that this applies to all legacy and alternate domains, too. If you have old domains that redirect to your current domain, or are combining sites from multiple domains into one, you need to verify that all variants of each one redirect properly. I had a case where just the http variant of a legacy domain was not redirected properly, and Google indexed an entire copy of the production site under that legacy domain.
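To make the combinations concrete, here is a minimal sketch that lists every protocol and subdomain variant of a URL and flags any that do not 301 to the canonical version. The names `host_variants` and `find_bad_redirects` are hypothetical, and `resolve` is an assumed callable that you would back with your own HTTP client (with redirect-following turned off); the sample below fakes it with a dictionary so nothing touches the network.

```python
from typing import Callable, Optional
from urllib.parse import urlsplit, urlunsplit

# Assumed shape: resolve(url) -> (status code, Location header or None) of the FIRST response.
Resolver = Callable[[str], tuple[int, Optional[str]]]


def host_variants(canonical_url: str) -> list[str]:
    """List the http/https and www/non-www variants of a URL, minus the canonical one."""
    parts = urlsplit(canonical_url)
    bare_host = parts.netloc.removeprefix("www.")
    variants = []
    for scheme in ("http", "https"):
        for host in (bare_host, "www." + bare_host):
            url = urlunsplit((scheme, host, parts.path, parts.query, parts.fragment))
            if url != canonical_url:
                variants.append(url)
    return variants


def find_bad_redirects(canonical_url: str, resolve: Resolver) -> list[tuple[str, int, Optional[str]]]:
    """Return the variants that do not 301 straight to canonical_url."""
    problems = []
    for variant in host_variants(canonical_url):
        status, location = resolve(variant)
        if status != 301 or location != canonical_url:
            problems.append((variant, status, location))
    return problems


# Fake responses for illustration only; a real check would issue HTTP requests.
fake_responses = {
    "http://example.com/about/": (301, "https://example.com/about/"),
    "http://www.example.com/about/": (302, "https://example.com/about/"),
    "https://www.example.com/about/": (200, None),
}
for problem in find_bad_redirects("https://example.com/about/", lambda url: fake_responses[url]):
    print(problem)
```

Run the same check against each legacy or alternate domain, and against at least one interior page per domain, since a rule that works for the home page can still miss deeper URLs.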

Root Directory Files

One of the ways to authenticate with Google Search Console is to place a key file in the root directory of the site. If one is present, migrate it to the new site to save the inconvenience of having to re-authenticate.

Also make sure your favicon.ico file gets moved over.

Redirects

Probably the most frequent site redesign issue I encounter is the mishandling (or omission) of redirects. This can have anywhere from a significant to a disastrous SEO impact on your site. Here’s how to plan and execute a redirect strategy. Start it in conjunction with the development of the site’s Information Architecture. It’s not done one week before launch!

The process:

- Create a Master Content List from the superset of URLs found by combining page lists from Google Analytics, Google Search Console, and your own crawl. Using Search Console and crawl data will make sure you don’t miss PDFs and other pages that might not show in your Analytics data. Using Analytics data makes sure you include pages that might not be linked to from other pages (e.g., campaign landing pages).

- In Excel or Google Sheets, combine Analytics and Search Console performance data so that you can see organic impressions, organic clicks, pageviews, entrances, and other key metrics for each URL. You can also add link data from Search Console, SEMrush, Moz, etc. You may have pages that don’t see a ton of traffic but are still valuable for their link strength.

- It’s also a good idea to revisit your legacy redirects, the ones from previous versions of your site to the current version. Add those legacy URLs to your Master Content List and plan updated redirects for them. This helps you avoid sending those URLs through double or even triple redirects.

- Create columns for “Redirect Y/N?” and “Redirect to:”. Review each URL and determine if you’re going to redirect the page or not. To aid your decision-making, you have traffic, link, and performance data for each page. If you choose to leave content behind and not redirect it, at least you have data to show you what you’re giving up.
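The double and triple redirects mentioned in the process above are easy to find once the redirect plan is a simple old-to-new mapping. As a rough sketch (the function name `collapse_redirects` and the sample paths are made up for illustration), this rewrites every source URL to point straight at its final destination:

```python
def collapse_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every source URL directly at the end of its redirect chain."""
    collapsed = {}
    for source, target in redirects.items():
        seen = {source}
        # Keep following hops while the current target is itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        collapsed[source] = target
    return collapsed


# Hypothetical plan: a URL from two redesigns ago chains through the previous one.
plan = {
    "/products.html": "/products/",      # legacy redirect from an earlier version
    "/products/": "/shop/",              # redirect planned for this redesign
    "/about-us.html": "/about/",
}
print(collapse_redirects(plan))
# {'/products.html': '/shop/', '/products/': '/shop/', '/about-us.html': '/about/'}
```

Raising on a loop is a deliberate choice here: a loop in the plan is a mistake to fix by hand, not something to paper over.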

I usually create the Master Content List in a Google Sheet or other shared format that makes it easier for a team to work with. (Plus I can use tools like Supermetrics to pull in updated Analytics and Search Console data throughout the process.)
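If you prefer to assemble the list in code before loading it into a sheet, the merge is just a union of URLs with the metrics joined on. This is a sketch under assumed input shapes (plain dictionaries keyed by URL, as you might get from exported CSVs); `build_master_list` and the column names are placeholders of mine, not anything the tools themselves export.

```python
def build_master_list(analytics: dict, search_console: dict, crawl: list) -> list[dict]:
    """Merge three URL sources into one row per URL, with empty redirect columns.

    analytics:      url -> {"pageviews": int, "entrances": int}   (assumed shape)
    search_console: url -> {"impressions": int, "clicks": int}    (assumed shape)
    crawl:          list of URLs found by your own crawler
    """
    all_urls = set(analytics) | set(search_console) | set(crawl)
    rows = []
    for url in sorted(all_urls):
        row = {
            "url": url,
            "pageviews": 0, "entrances": 0, "impressions": 0, "clicks": 0,
            "redirect": "",      # the "Redirect Y/N?" column, filled in by hand
            "redirect_to": "",   # the "Redirect to:" column, filled in by hand
        }
        row.update(analytics.get(url, {}))
        row.update(search_console.get(url, {}))
        rows.append(row)
    return rows


# Illustrative data: a landing page only Analytics knows about, a PDF only the crawl found.
analytics = {"/lp/spring-sale/": {"pageviews": 900, "entrances": 850}}
search_console = {"/pricing/": {"impressions": 4000, "clicks": 120}}
crawl = ["/pricing/", "/docs/brochure.pdf"]

for row in build_master_list(analytics, search_console, crawl):
    print(row["url"], row["pageviews"], row["clicks"])
```

Defaulting missing metrics to zero keeps every URL in the list, which is the point: a page with no recorded traffic still needs a redirect decision.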

CMS Configuration

NOINDEX Meta Tag

WordPress has a feature that lets you NOINDEX all of your pages with the check of a single box. As with a robots.txt exclusion (except this is even more powerful), developers might set it to prevent a dev site from being indexed. But it, too, can get left that way when the site becomes the production version. Search engine crawlers then won’t index the new pages, crushing your organic traffic.
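A leftover NOINDEX is easy to test for in a page’s HTML. The following is a minimal sketch using Python’s built-in `html.parser`; the names `RobotsMetaFinder` and `has_noindex` are mine, and it only looks at the robots meta tag, so a noindex sent through an HTTP response header would need a separate check.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect the directives from every <meta name="robots"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # "noindex, nofollow" -> ["noindex", "nofollow"]
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def has_noindex(html: str) -> bool:
    """Return True if the page's robots meta tag includes a noindex directive."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


blocked = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
open_page = '<html><head><meta name="robots" content="index, follow"></head></html>'
print(has_noindex(blocked))    # True
print(has_noindex(open_page))  # False
```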

XML Sitemap

Most modern CMSs will automatically generate an XML sitemap for search crawlers to use. Make sure this setting is enabled, and that your robots.txt file has a record pointing to the new sitemap file.
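To confirm the robots.txt record is there, you can pull the `Sitemap:` lines out of the file and compare them with the sitemap URL your CMS generates. A small sketch, assuming you already have the robots.txt text in hand (`sitemap_urls` is a hypothetical helper name and the URLs are examples):

```python
def sitemap_urls(robots_txt: str) -> list[str]:
    """Return the URLs listed on Sitemap: lines in a robots.txt body."""
    urls = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()      # drop trailing comments
        field, sep, value = line.partition(":")   # split on the FIRST colon only
        if sep and field.strip().lower() == "sitemap" and value.strip():
            urls.append(value.strip())
    return urls


robots = """\
User-agent: *
Disallow: /wp-admin/

Sitemap: https://example.com/sitemap_index.xml
"""
print(sitemap_urls(robots))  # ['https://example.com/sitemap_index.xml']
```

An empty list, or a URL that still points at the old site’s sitemap, is the thing to look for.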

Missing Landing Pages

Sometimes landing pages are purposefully excluded from the site navigation. You might miss them when planning the information architecture of the new site because they’re not linked to from other pages. When you’re building a content list for migration, don’t rely just on data from a crawl. Review all of your digital marketing campaign landing pages.

Analytics

Most likely the redesign of your site will impact your analytics setup. Of course you’ll want to verify that your analytics code is actually present on the new site. I’ve seen it left off many times, causing the loss of important traffic data. In addition to verifying that the core analytics code is working, consider other elements of the configuration:

- Update conversions/goals. These can rely on page URLs or events to trigger, both of which may have changed.

- Correct analytics account — some designers will point a development site to a different analytics account, so that the test data doesn’t mix with the production data. There are better ways to do this (like view filters). Make sure the data is going to the right account.

- Update events, form tracking, etc. — if you have analytics events fired via custom code or a tag manager you’ll likely need to update the implementation to work with the new site. Plus, the new site may have additional interactions that you want to set up as new events.

Paid Campaigns

Don’t forget about campaigns you have running and the pages they land on. Change the landing page URLs in your ad platform, as they have likely changed from the previous version of the site. You don’t want to pay for traffic to a 404 error page. (Google Ads will send you a nice email informing you that your ads have been disapproved because the landing pages aren’t working.) It’s not a bad idea to pause your paid campaigns for a day or so during the site transition. That gives you a chance to make sure everything is working OK.

Google Search Console

If your new site uses a different protocol or subdomain, make sure you set up Google Search Console (GSC) to track the new configuration. GSC treats https, http, www, and non-www variants as different sites.

Quality Checks

Make yourself a pre-launch checklist of things to verify, including all of the applicable things above. Once the new site is launched there are a lot of things to check. One of the best tools at your disposal is a good crawler, like Screaming Frog. You can use it to:

- Crawl for broken links

- Find hard-coded links to dev site URLs

- Verify your redirects (by uploading a list of legacy URLs for it to crawl and check)

- Audit your page titles, descriptions, etc.
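If you don’t have a crawler handy, some of these checks can be roughed out in a few lines. As one example, the sketch below scans a page’s HTML for links that still point at a development host. `LinkCollector`, `dev_links`, and the `dev.example.com` hostname are placeholders; substitute whatever your dev environment actually used.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class LinkCollector(HTMLParser):
    """Gather every href and src attribute value found in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.links.append(value)


def dev_links(html: str, dev_hosts: set[str]) -> list[str]:
    """Return the links whose hostname is one of the given dev hosts."""
    collector = LinkCollector()
    collector.feed(html)
    # Relative links have no hostname, so they are never flagged.
    return [link for link in collector.links if urlsplit(link).hostname in dev_hosts]


page = """
<a href="/about/">About</a>
<a href="https://dev.example.com/contact/">Contact</a>
<img src="//dev.example.com/img/logo.png">
"""
print(dev_links(page, {"dev.example.com"}))
# ['https://dev.example.com/contact/', '//dev.example.com/img/logo.png']
```

This only covers one page at a time; a crawler like Screaming Frog does the same thing across the whole site, which is why I reach for it first.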

Set up an “HTTP 404 Error” report in your analytics tool and monitor it daily. (For example, you can filter on “page title contains ‘not found’”.) Supplement that with Crawl Errors data from Search Console. Implement redirects for important pages that you missed and that still result in 404 errors.

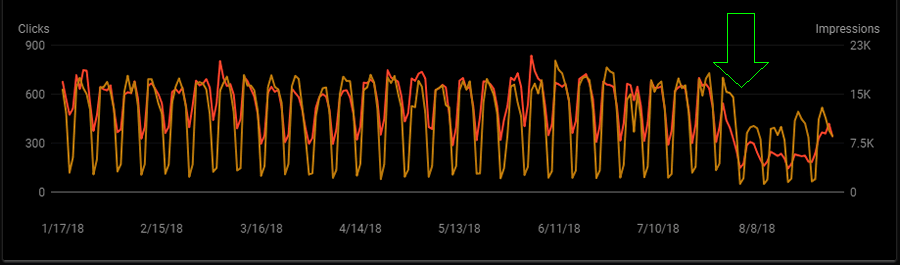
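The same “page title contains ‘not found’” filter works on an exported pages report. Here is a minimal sketch, assuming each row has `page`, `title`, and `pageviews` fields (those field names, and the function name `not_found_hits`, are my assumptions about the export rather than a fixed format):

```python
def not_found_hits(rows: list[dict], marker: str = "not found") -> list[dict]:
    """Return rows whose page title contains the marker, busiest pages first."""
    hits = [row for row in rows if marker in row["title"].lower()]
    return sorted(hits, key=lambda row: row["pageviews"], reverse=True)


# Illustrative export rows; real data would come from your analytics tool.
report = [
    {"page": "/pricing/", "title": "Pricing | Example Co", "pageviews": 1200},
    {"page": "/old-blog/post-1", "title": "Page Not Found | Example Co", "pageviews": 35},
    {"page": "/services.html", "title": "Page not found | Example Co", "pageviews": 210},
]
for hit in not_found_hits(report):
    print(hit["page"], hit["pageviews"])
# /services.html 210
# /old-blog/post-1 35
```

Sorting by pageviews puts the missed redirects that matter most at the top of the list.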

Use your analytics tool to monitor your traffic daily for a couple of weeks after the site is launched. If there are SEO problems, they’ll become visible within a week or so.